Unlocking ML performance metrics: a deep dive

- Edwin Kuss

- 7 min

Evaluating how well a machine learning model performs is one of the most critical steps in the entire ML lifecycle. Performance metrics aren’t just “nice to have”—they determine whether a model is trustworthy, whether it generalizes well, and whether it should be deployed, tuned, or rebuilt. The right metrics help teams compare models objectively, detect problems early, and steer experiments toward meaningful improvements.

In machine learning, performance metrics typically fall into two major categories: regression metrics for predicting continuous values and classification metrics for predicting discrete classes. Each metric highlights different aspects of model behavior—accuracy, error magnitude, probability confidence, robustness, fairness, and more—making the choice far from trivial.

Selecting the correct metric can be challenging, especially when working with noisy data, imbalanced classes, or business constraints. Yet fair, consistent, and accurate evaluation is essential for building models that reliably perform in real-world scenarios. In this deep dive, we’ll break down the most critical ML performance metrics, when to use them, and how to interpret them effectively.

How to choose the right machine learning performance metric

Selecting the proper evaluation metric is one of the most important decisions in any ML project. A wrong choice can make a weak model appear strong, or hide problems that later surface in production. Below is a more transparent, more practical framework that helps teams pick the right metric with confidence.

1. Start with the business objective

Every metric should map directly to a real business outcome.

For example:

– If your goal is preventing churn, precision may matter more than recall because contacting the wrong users costs money.

– If you’re detecting fraud or security incidents, recall may take priority to avoid missing dangerous cases.

Ask: “What failure is more costly — a false negative or a false positive?”

2. Understand what each metric actually measures

Not all metrics behave well in all situations.

For instance:

– Accuracy looks impressive, but becomes meaningless when classes are imbalanced.

– Precision and recall give a more accurate picture when the positive class is rare.

– MSE vs. MAE impacts how sensitive your regression model is to outliers.

Choosing blindly can easily lead to a misleading interpretation of performance.

3. Match the metric to the task and data distribution

Different tasks require different metrics:

– Classification: Precision, recall, F1-score, ROC-AUC, PR-AUC

– Regression: MSE, RMSE, MAE, R²

– Ranking/recommendation: MAP, NDCG

– Imbalanced data: F1-score, ROC-AUC, PR-AUC

Your data’s characteristics — imbalance, noise level, outliers — determine which metrics are reliable and which are not.

4. Prioritize interpretability for stakeholders

Complex metrics don’t always help decision-making.

In some projects, simple metrics such as accuracy, precision, or MAE can convey results more quickly and clearly to product teams, managers, and customers.

If non-technical stakeholders cannot understand what a metric means, it won’t be helpful for decision-making.

5. Evaluate trade-offs and adjust thresholds

Most classification models allow you to adjust thresholds to balance false positives and false negatives.

This is essential when the cost of errors is uneven — for example, in fraud detection, medical diagnosis, credit scoring, etc.

Threshold tuning often delivers more practical improvements than changing the model itself.

6. Align with project-level and system-level goals

Ask what matters most in your context:

– high precision?

– high recall?

– balanced performance?

– ranking ability?

– calibration?

– robustness to new data?

Your “success metric” should reflect the real-world problem, not just academic convention.

7. Use the same metric set to compare models consistently7. Use the same metric set to compare models consistently

Model comparison becomes fair and objective only when all candidates are evaluated using the same metrics on the same data splits.

Consistency allows you to spot the best-performing model and track improvements over time.

Recommended ML metrics by task

ML Task | Best Metrics to Use | When to Use Them / Why They Matter |

Binary Classification | Accuracy (only for balanced data), Precision, Recall, F1-score, ROC-AUC, PR-AUC | Precision/Recall/F1 for imbalanced data; ROC-AUC for threshold-independent evaluation; PR-AUC when false positives/negatives have different costs |

Multi-Class Classification | Accuracy, Macro F1, Weighted F1, Confusion Matrix | Macro/Weighted F1 when class distribution is uneven; confusion matrix for detailed error patterns |

Imbalanced Classification | Precision, Recall, F1-score, PR-AUC, ROC-AUC | Avoid accuracy; use PR-AUC when the positive class is rare |

Regression | MAE, MSE, RMSE, R² | MAE when outliers matter less; MSE/RMSE when penalizing significant errors more heavily |

Forecasting / Time Series | MAPE, SMAPE, MAE, RMSE, MASE | MAPE for business forecasting; SMAPE for symmetric error evaluation; MAE/MSE for general forecasting reliability |

Ranking / Recommendation Systems | MAP, NDCG, Precision@K, Recall@K | Measures how well items are ranked; @K metrics assess performance in top results shown to users |

Clustering | Silhouette Score, Davies–Bouldin Index, Calinski–Harabasz Index | Unsupervised evaluation of cohesion and separation of clusters |

Anomaly Detection | Precision, Recall, F1-score, ROC-AUC, PR-AUC | Use recall when missing anomalies is costly; PR-AUC for highly imbalanced distributions |

NLP – Classification | Accuracy, F1-score, ROC-AUC | Language tasks often suffer from imbalanced datasets, so F1 is key |

NLP – Generation | BLEU, ROUGE, METEOR | Measures the quality of machine-generated text against the reference text |

Computer Vision – Classification | Accuracy, Precision, Recall, F1, ROC-AUC | Similar to standard classification, but often with more imbalance |

Computer Vision – Object Detection | mAP, IoU | mAP evaluates detection + classification; IoU measures bounding box overlap |

Computer Vision – Segmentation | IoU, Dice Score | Pixel-level evaluation of segmentation accuracy |

In conclusion

Selecting the right machine learning performance metric is not a one-size-fits-all decision — it depends on your business goals, the nature of your data, and the trade-offs you are willing to make. Whether you care more about minimizing false negatives, balancing precision and recall, or optimizing for interpretability, the key is to choose metrics that support your real-world objectives and revisit them as your project evolves. Fine-tuning thresholds and comparing metrics across different models helps ensure that your system performs reliably where it matters most.

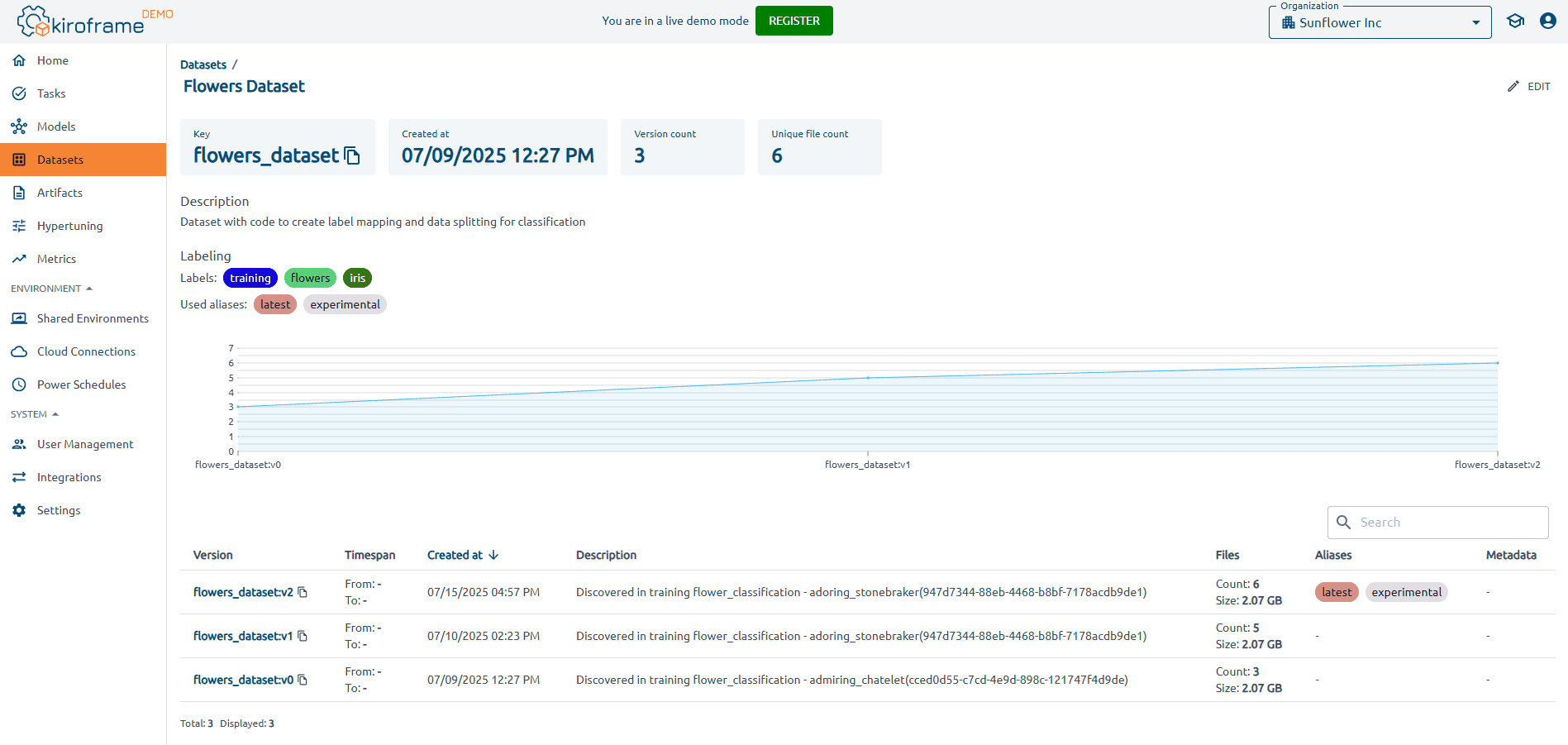

In practice, practical metric evaluation also depends on being able to track, visualize, and compare model behavior over time. This is where platforms like Kiroframe help—not by promoting any single metric, but by giving ML teams clear visibility into training and inference performance (CPU, GPU, RAM, latency, throughput) and providing structured experiment history. These capabilities make it easier to understand how metric changes reflect real system behavior and to support more informed, data-driven decisions.

Kiroframe provides complete transparency and offers MLOps tools such as ML experiment tracking, ML ratings, model versioning, and hyperparameter tuning → Try it out in Kiroframe demo