Manage artificial intelligence infrastructure effectively and scale machine learning operations

Kiroframe helps you fix the real problems of scaling ML

Limited visibility into infrastructure and runtime performance

Difficult to monitor model metrics and performance in real time

Too many manual steps in model training

Slow model rollout and unstable performance

Kiroframe helps teams scale machine learning in enterprise environments. The platform monitors ML infrastructure, optimizes resource usage, and brings together all ML operations — from training to deployment — in one place.

Start managing your AI infrastructure

Register now to explore how Kiroframe monitors and optimizes your AI workloads from day one.

How Kiroframe optimizes your ML infrastructure

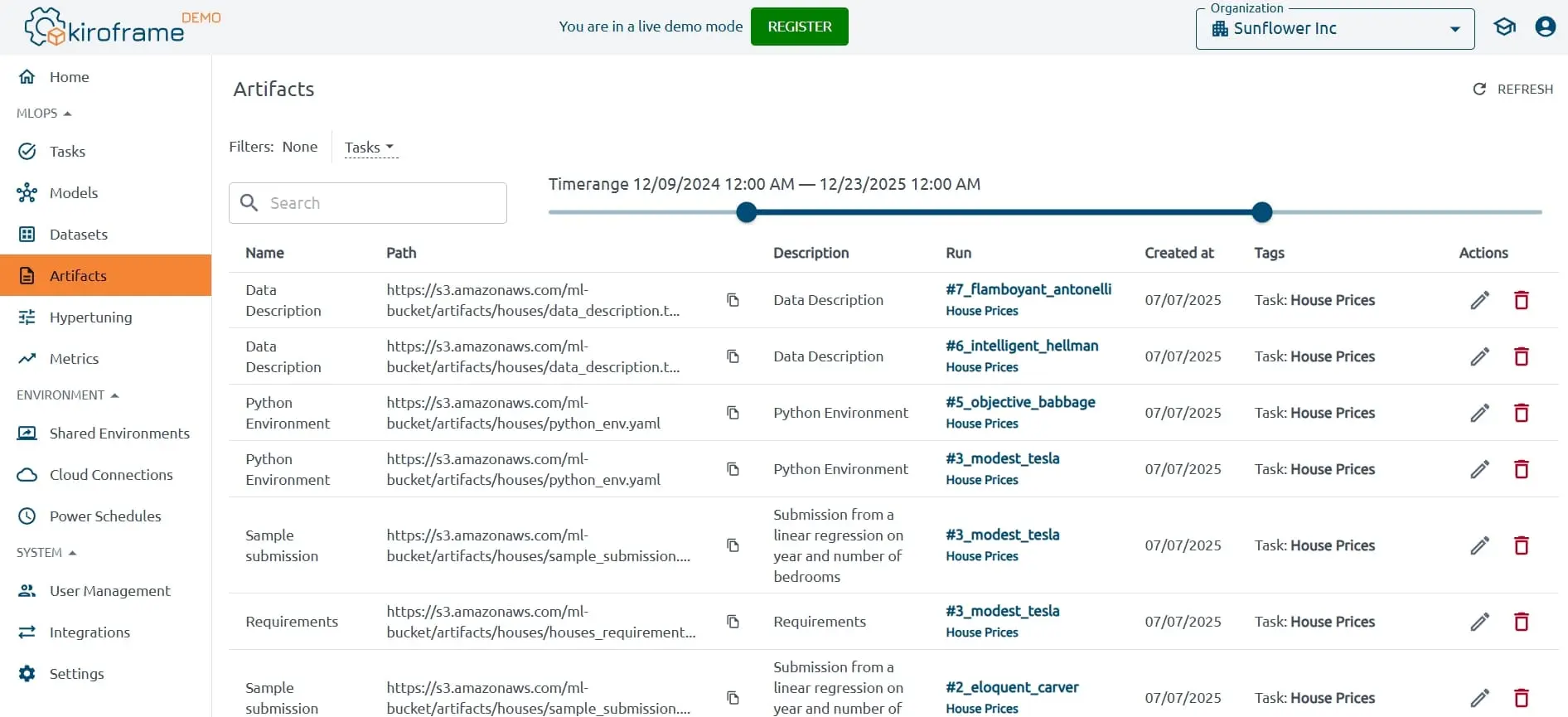

Centralized Data Storage

Cloud storage allows easy access and sharing of datasets across teams. This promotes collaboration and ensures that everyone is working with the most up-to-date data.

Helpful tips

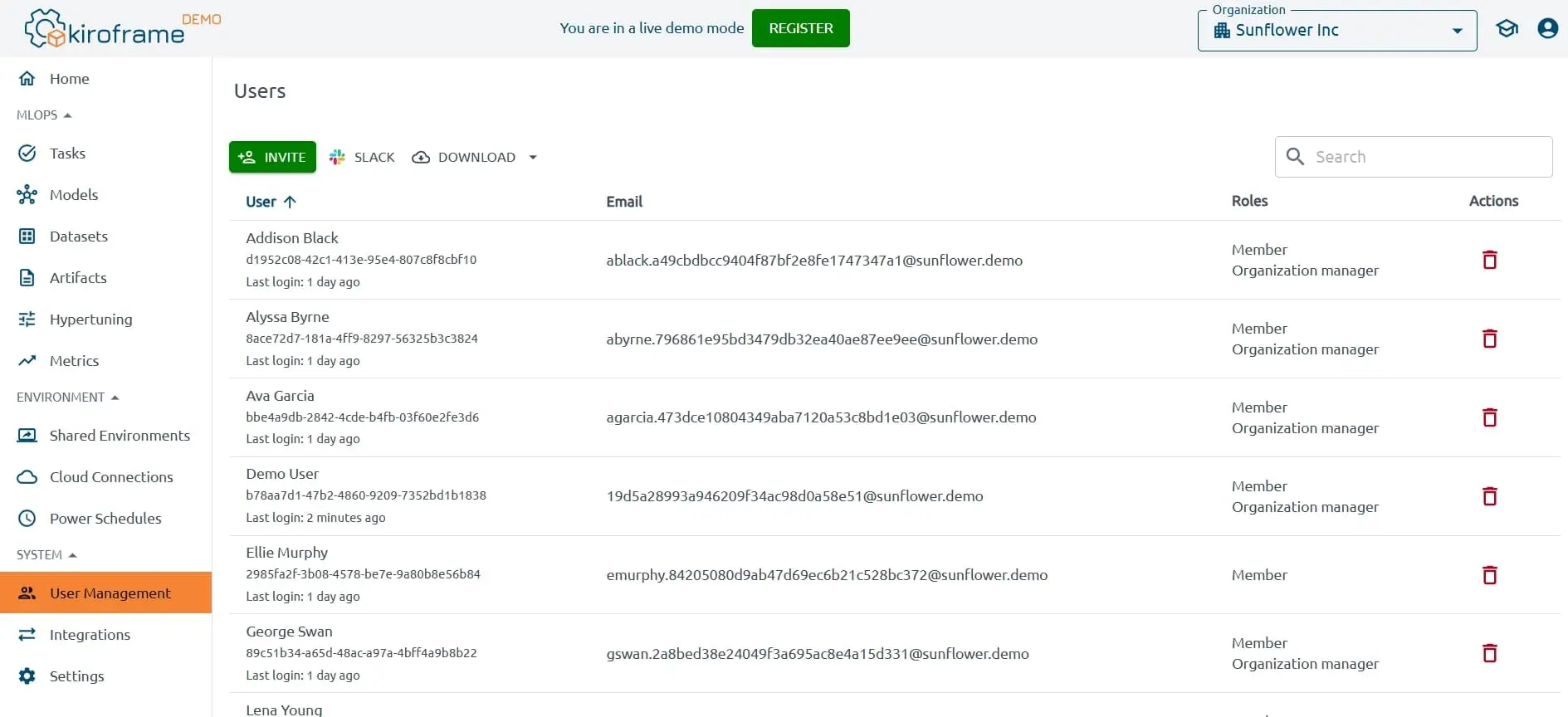

Use the task section to track and manage your machine learning experiments. Tasks let you visualize, search for, and compare ML runs and access run metadata for analysis.

Use the models section to keep track of your machine learning models, which make predictions or decisions based on data. Tracking them in one place helps you make informed decisions about resource allocation and identify opportunities for optimization.

Connect integrations to automate workflows and foster better teamwork across platforms.

Kiroframe simplifies hyperparameter tuning by automatically launching multiple experiments.

Experiments can be executed in parallel on multiple instances, significantly speeding up the process.
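To make the idea of parallel experiments concrete, here is a minimal Python sketch of a grid search whose trials run concurrently. It is a generic illustration, not Kiroframe's API: `run_experiment`, `grid`, `run_parallel`, and the toy scoring formula are all hypothetical stand-ins, and local threads stand in for separate instances.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_experiment(params):
    """Hypothetical stand-in for one training run; returns (params, score).

    A real run would train a model and report a validation metric.
    Here the score is a toy function that peaks at lr=0.01, batch_size=64.
    """
    lr, batch_size = params["lr"], params["batch_size"]
    score = -((lr - 0.01) ** 2) * 1e4 - ((batch_size - 64) / 64) ** 2
    return params, score


def grid(search_space):
    """Expand a dict of value lists into one dict per combination."""
    names = list(search_space)
    return [dict(zip(names, values)) for values in product(*search_space.values())]


def run_parallel(search_space, max_workers=4):
    """Launch every combination concurrently and return results, best first."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(run_experiment, grid(search_space)))
    return sorted(results, key=lambda r: r[1], reverse=True)


# Assumed example search space: 3 learning rates x 3 batch sizes = 9 trials.
search_space = {"lr": [0.001, 0.01, 0.1], "batch_size": [32, 64, 128]}
results = run_parallel(search_space)
best_params, best_score = results[0]
print(best_params)
```

Threads are used here only because they are enough to dispatch work that runs elsewhere. In practice each trial would typically be sent to its own instance, and the wall-clock time would approach that of the slowest single trial rather than the sum of all trials.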

Optimize and monitor your AI infrastructure in minutes

Get access to Kiroframe’s AI infrastructure monitoring platform and discover inefficiencies instantly.
