Large language models vs. small language models: how businesses choose

- Edwin Kuss

- 8 min

Table of contents

- What are language models in a business context?

- What defines LLM models?

- What are small language models?

- Large language models vs. small language models: enterprise comparison

- Real-world scenarios: how companies choose

- Common misconceptions and pitfalls

- How to choose between LLM models and small language models

- Why language model management matters at scale

- Conclusion

Language models have quickly become one of the most visible and impactful forms of artificial intelligence in business. According to recent industry data, approximately 65–67% of organizations now use generative AI tools powered by large language models (LLMs) across various workflows, indicating that these systems are now a core part of normal operations rather than niche experiments.

At the same time, the enterprise LLM market is growing rapidly, projected to expand from a multi-billion-dollar base in 2025 toward significantly higher value in the coming years, reflecting strong demand for advanced AI capabilities.

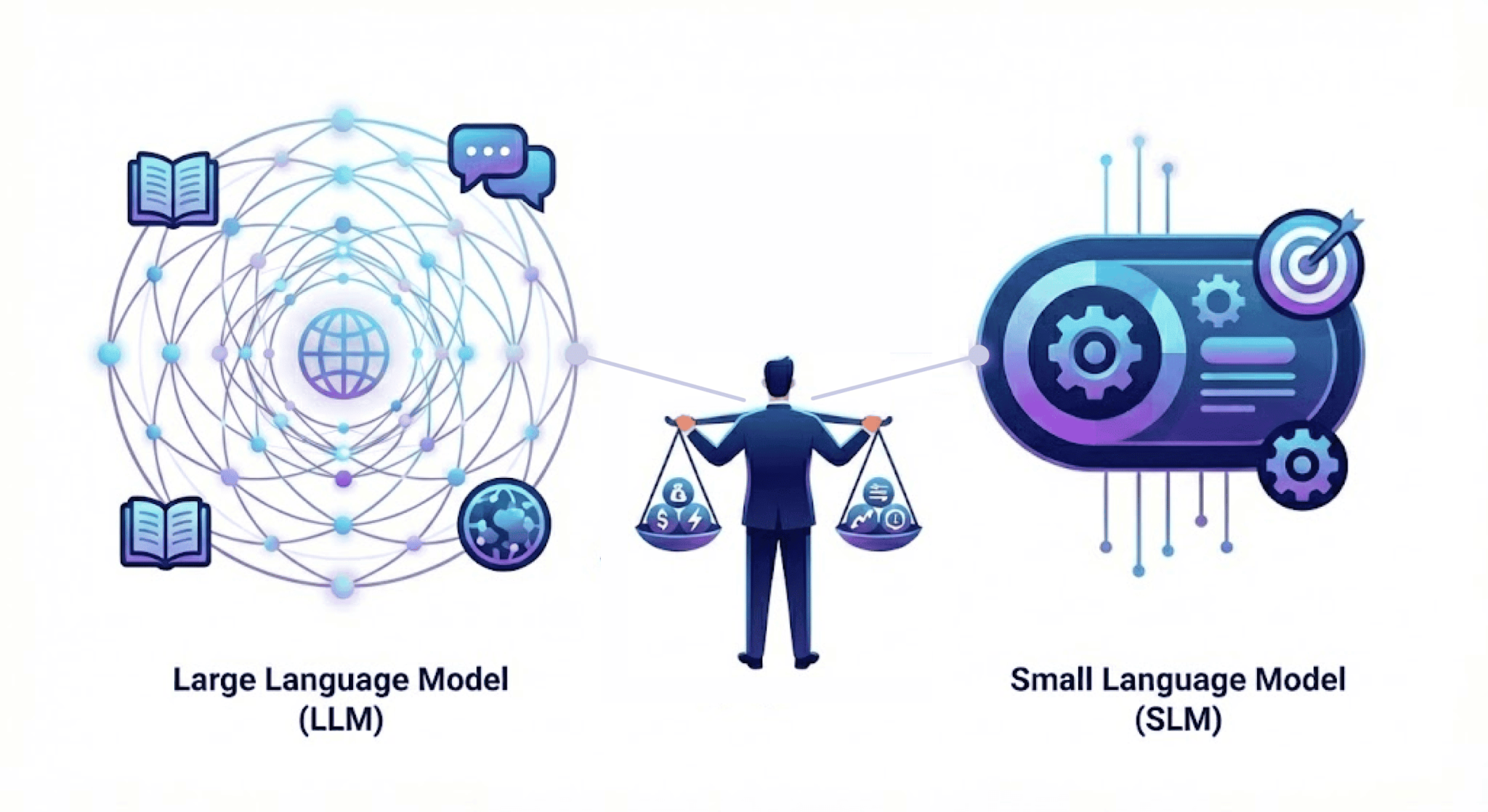

Despite this widespread adoption, not all business problems require or benefit from large models — and in many cases, smaller, task-focused language models provide a more efficient, cost-effective solution. This underscores the importance of understanding the differences between small and large language models and strategically selecting the right fit for a particular use case.

This article explains what language models are in a business context, how LLMs and small language models differ, where each approach fits best, and how to choose an AI model that aligns with real business goals rather than hype.

What are language models in a business context?

Definition and purpose of language models

Language models are AI systems designed to understand, generate, and transform human language. In business environments, they are used to process text-based information such as customer messages, documents, code, reports, and internal knowledge. Their purpose is not abstract intelligence, but automation, consistency, and scale in language-heavy workflows.

When companies ask, “What are language models?”, the practical answer is simple: they are tools that help organizations work with text faster and more efficiently — whether that means answering questions, summarizing information, classifying content, or generating responses.

Why size matters in language models

One key difference among language models is size, typically measured by the number of parameters. Larger models tend to be more flexible and capable of handling diverse tasks, while smaller models are more efficient and easier to control. Size affects not only intelligence, but also cost, speed, deployment options, and operational complexity.

For businesses, size is not a goal in itself. It is a design choice that directly influences how a model behaves in production.

What defines LLM models?

Core characteristics of LLM models

Large language models are built with a vast number of parameters and trained on massive, diverse datasets. This scale allows them to generalize across many topics, languages, and tasks without task-specific training. In practical terms, the question “What is an LLM?” can be answered as follows: it is a general-purpose language system capable of handling a wide range of text-based tasks with minimal configuration.

LLMs excel at understanding context, handling complex prompts, and producing fluent, human-like text. However, this versatility comes with higher computational requirements and less predictability.

LLM use cases in business

LLM use cases in business often focus on general-purpose language tasks. Common examples include customer support assistants that handle a wide range of questions, internal knowledge tools that search across documents, content-drafting workflows, and developer tools that assist with code generation or explanation.

Large language models for business are especially valuable when tasks are varied, open-ended, or too complex to define with strict rules.

LLM models examples (high level)

Rather than focusing on specific vendors, examples of LLM models can be grouped by function: general conversational assistants, code-focused language models, and multimodal models that work with both text and other data types. What they share is scale, flexibility, and broad applicability across domains.

What are small language models?

Key characteristics of SLMs

Small language models are designed with significantly fewer parameters and a narrower scope. They focus on efficiency, speed, and control, often optimized for specific tasks or domains. When businesses ask, “What are small language models?”, the answer lies in their practicality rather than their breadth.

These models require less compute, respond faster, and are easier to deploy in constrained environments. They are also more predictable, which can be critical for production systems.

Typical use cases for small language models

These compact language models are commonly used for internal automation, classification, routing, sentiment analysis, and domain-specific text processing. They are well-suited for high-volume workloads where cost and latency matter, such as processing support tickets, tagging documents, or extracting structured data.

Because of their efficiency, small language models are also attractive for on-premises deployments and environments with strict data or infrastructure constraints.

Large language models vs. small language models: enterprise comparison

From an enterprise perspective, the choice between LLMs and SLMs is less about superiority and more about fit.

Performance and intelligence

LLMs are designed to handle complex, ambiguous requests and perform multi-step reasoning across a wide range of topics. Small language models, while less flexible, are more compact and task-focused. They typically excel when the problem is clearly defined and repetitive, often delivering more stable and predictable results. In business settings, higher intelligence does not automatically translate into better outcomes when it exceeds the task’s requirements.

Cost and infrastructure

Highly capable language systems usually demand significant compute resources, specialized hardware, and higher operational budgets, particularly as usage scales. More efficient SLMs can run on standard infrastructure and offer clearer cost predictability over time. For many organizations, infrastructure simplicity and budget control are more important than achieving maximum model capability.
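As a rough illustration of how usage drives cost, the sketch below estimates monthly spend for a model billed per 1,000 tokens. The function name, the per-token pricing scheme, and every figure are assumptions chosen for illustration, not real vendor prices. For a self-hosted small model, the per-token price would be replaced by an amortized infrastructure cost.

```python
def monthly_cost(requests_per_month: int,
                 tokens_per_request: int,
                 price_per_1k_tokens: float) -> float:
    """Estimate monthly spend for a model billed per 1,000 tokens."""
    total_tokens = requests_per_month * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical workload and prices; substitute real figures before relying on this.
REQUESTS = 1_000_000
TOKENS = 500
large_cost = monthly_cost(REQUESTS, TOKENS, price_per_1k_tokens=0.01)
small_cost = monthly_cost(REQUESTS, TOKENS, price_per_1k_tokens=0.001)

print(f"Large model: ${large_cost:,.2f} per month")
print(f"Small model: ${small_cost:,.2f} per month")
```

Running the same calculation with projected request volumes gives a first view of how the gap between the two options changes as usage grows.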

Latency and speed

Latency is critical in production environments, especially for workflows that require near-real-time responses. Small language models generally respond faster and handle high request volumes more reliably. LLMs may introduce delays under load, which can negatively affect user experience or downstream business processes.

Customization and fine-tuning

LLMs are designed to perform reasonably well across many domains, but adapting them to specific terminology or internal workflows can be complex and resource-intensive. Small models, by contrast, are typically easier to fine-tune using domain-specific data and business rules. This makes them a strong fit for organizations with clearly defined processes and specialized language requirements.

Data privacy and deployment options

Large language models are often deployed in managed or cloud-based environments, which can limit control over where data is processed and stored. Small language models, in contrast, are more commonly deployed in fully controlled environments, such as on-premises or private cloud setups. For enterprises operating under strict security or regulatory constraints, this deployment flexibility often becomes a decisive factor.

Taken together, these factors show that enterprise decisions are shaped less by raw model capability and more by how performance, cost, and operational constraints align with business objectives.

Real-world scenarios: how companies choose

When LLM models are the better choice

LLMs are often the right choice for customer-facing assistants, internal knowledge search, and creative or exploratory tasks. For example, a SaaS company may use an LLM to power a support chatbot that must handle a wide variety of questions without predefined rules.

In these cases, flexibility and language understanding outweigh concerns about efficiency.

When SLM models work better

Small language models are a better fit for structured, repetitive, or high-volume tasks. A logistics company might use a small model to classify incoming emails, extract key fields from documents, or route requests internally. Here, predictability and cost control are more important than broad reasoning ability.

When a hybrid approach makes sense

Many organizations combine both approaches. An LLM may handle complex interactions, while small language models process routine tasks behind the scenes. This hybrid strategy allows businesses to balance intelligence, cost, and control across different workflows.
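A hybrid setup of this kind can be sketched as a simple router. The example below is a minimal illustration, not a production design: `small_model` and `large_model` are hypothetical stand-ins for real model calls, and the keyword list used to detect routine requests is an assumption. In practice, the routing step might itself be a small classifier.

```python
# Hypothetical stand-ins for real model calls; replace with actual clients.
def small_model(text: str) -> str:
    return f"[small] routed: {text}"


def large_model(text: str) -> str:
    return f"[large] answered: {text}"


# Assumed keywords that mark routine, well-defined requests.
ROUTINE_KEYWORDS = ("invoice", "tracking", "password reset")


def is_routine(text: str) -> bool:
    """Return True if the request matches a known routine pattern."""
    lowered = text.lower()
    return any(keyword in lowered for keyword in ROUTINE_KEYWORDS)


def handle_request(text: str) -> str:
    """Send routine requests to the small model, everything else to the large one."""
    handler = small_model if is_routine(text) else large_model
    return handler(text)


print(handle_request("Where is my tracking number?"))
print(handle_request("Can you compare your plans for a 50-person team?"))
```

Keeping the routing rule separate from the model calls makes it easier to adjust which workloads go to which model as costs and requirements change.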

The table below summarizes how companies typically use small and large language models across common real-world business scenarios.

| Scenario | Model type | Example models (illustrative) | Who typically uses them | Primary business purpose |
| --- | --- | --- | --- | --- |
| Customer support & assistants | Large language models | GPT-class models, general-purpose LLMs | SaaS, e-commerce, digital services | Handle diverse user questions and conversations |
| Knowledge search & Q&A | Large language models | Retrieval-augmented LLMs | Enterprises, consulting firms | Search internal documents and knowledge bases |
| Ticket classification & routing | Small language models | Fine-tuned compact text models | Support teams, IT departments | Categorize and route requests at scale |
| Document extraction & tagging | Small language models | Domain-specific language models | Finance, logistics, and legal teams | Extract structured data from text |
| Hybrid workflows | LLM + small models | LLMs + task-focused models | Mature AI teams | Combine flexibility with cost control |

Common misconceptions and pitfalls

One common misconception is that larger models are always better. In reality, oversized models often introduce unnecessary cost and complexity for simple tasks. Another mistake is assuming that small language models are outdated or incapable; in fact, they are frequently better suited to production environments.

Businesses also underestimate operational challenges, such as monitoring performance, managing drift, and controlling costs. Choosing a language model without considering these factors often leads to rework later.

How to choose between LLM models and small language models

Understanding how to choose an AI model in this context starts with clarity.

Start by defining task complexity. If the task requires open-ended reasoning and broad language understanding, LLMs may be appropriate. For well-defined tasks, small language models are often more effective.

Next, evaluate cost and scale. Consider not only current usage, but how workloads may grow over time. Then assess data sensitivity and compliance requirements, which may limit deployment options.

Finally, think beyond deployment. Long-term success depends on monitoring, updates, and governance. Choosing between LLMs and small language models is not a one-time decision; it is part of an evolving AI strategy.
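The selection steps above can be condensed into a first-pass heuristic. The sketch below is one possible encoding, not a definitive rule set: the `UseCase` fields, the `recommend` function, and the three outcome labels are hypothetical names introduced for illustration, and a real decision would weigh more factors, such as monitoring and governance.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    open_ended: bool           # needs broad, open-ended reasoning
    high_volume: bool          # many requests where cost and latency matter
    strict_data_control: bool  # compliance limits where data may be processed


def recommend(use_case: UseCase) -> str:
    """Return a starting-point recommendation: 'large', 'small', or 'hybrid'."""
    constrained = use_case.high_volume or use_case.strict_data_control
    if use_case.open_ended and constrained:
        # Open-ended work under cost or compliance pressure: split the workload.
        return "hybrid"
    if use_case.open_ended:
        return "large"
    # Well-defined tasks default to the smaller, more predictable option.
    return "small"


print(recommend(UseCase(open_ended=True, high_volume=False, strict_data_control=False)))
print(recommend(UseCase(open_ended=False, high_volume=True, strict_data_control=True)))
```

The output is only a starting point for discussion, and it should be revisited as workloads and requirements evolve.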

Why language model management matters at scale

As companies deploy multiple language models across teams, managing them becomes increasingly complex. Models vary in cost, behavior, and risk, and without visibility and governance, AI systems can quickly become fragmented and difficult to control. Performance monitoring, cost tracking, and reproducibility become harder as usage grows.

As organizations scale their use of language models, the challenge increasingly shifts from choosing a model to managing AI workflows around it. Teams need visibility into experiments, datasets, model behavior, and performance over time — especially when multiple model types coexist.

This is where model-agnostic MLOps platforms, such as Kiroframe, become relevant. Rather than providing language models themselves, these platforms focus on organizing experiments, tracking artifacts, and maintaining reproducibility and governance across AI initiatives, helping teams treat language models as managed components within a broader AI system.

Conclusion

There is no universal winner in the comparison between LLMs and SLMs. Each serves a distinct purpose and plays a vital role in modern business AI. The most successful companies are those that understand these trade-offs and choose models based on real operational needs rather than assumptions about size or intelligence.

By treating language models as business tools — and not just technical achievements — organizations can build AI systems that are effective, sustainable, and aligned with long-term goals.